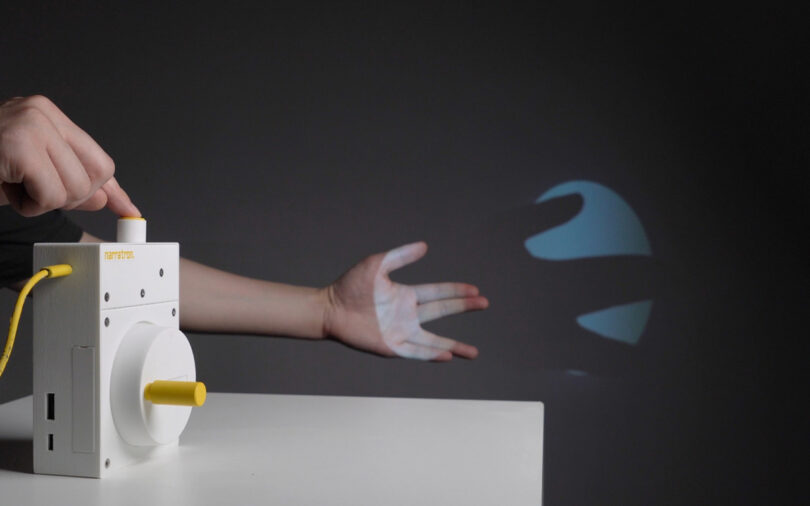

ChatGPT, Stable Diffusion, Midjourney, AI-enhanced smart home devices, and numerous systems sometimes completely invisible to us are already altering how we work, play, and interact with the world. But few would imagine this burgeoning, transformative technology taking the form of something as humble as the Narratron, a small projector with a hand crank designed to capture hand shadow puppetry and transform the poses into fairy tales narrated by artificial intelligence.

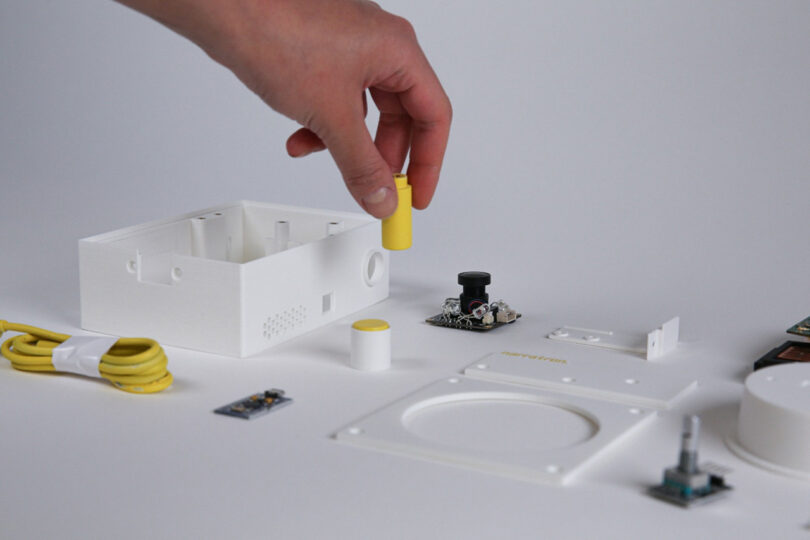

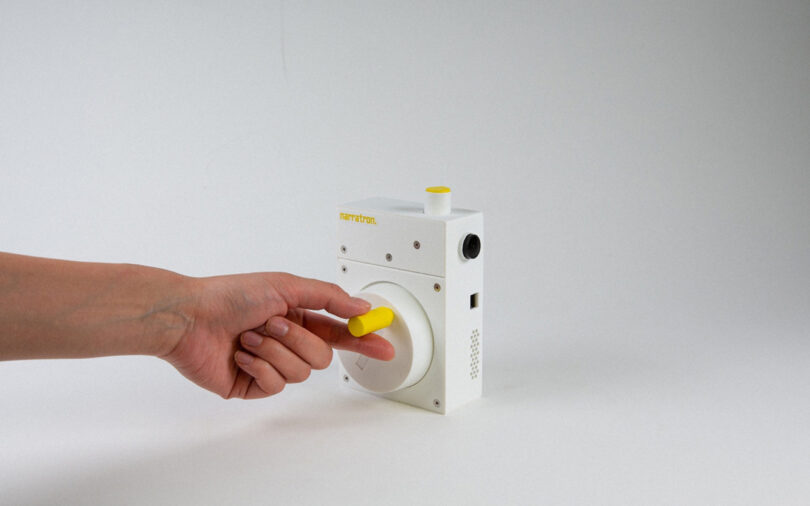

Designed by Aria Xiying Bao and Yubo Zhao at MIT’s School of Architecture and Planning, the Narratron seems almost an anachronism at first glance, a camera/projector realized with toy-like simplicity. The Narratron’s minimalist industrial design is intentionally uncomplicated, inviting an unobtrusively tactile experience not dissimilar to our earliest days in the company of toys. Except this toy is capable of turning shadow poses created with your hand into wondrously detailed stories revealed one turn of the crank at a time.

Spinning the knob is a nostalgic detail that doubles as an intuitive, tactile way to advance the story, with each rotation revealing a new chapter of the narrative.

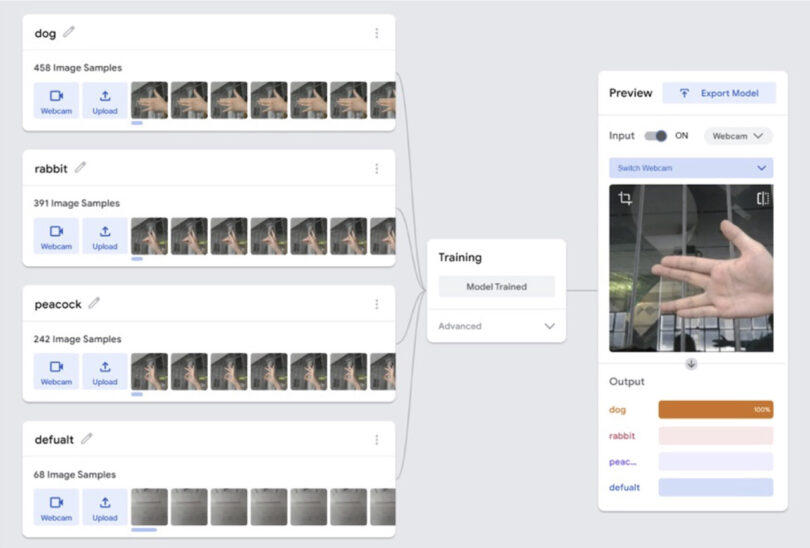

The Narratron is powered by ChatGPT’s language model, an AI system already known to fabricate fanciful tales on its own, or in machine learning parlance, to “hallucinate.” Bao and Zhao’s contraption treats AI’s propensity to fabricate as a feature rather than a bug, churning out stories complete with detailed plot lines, dialogue, and descriptive elements, all made up to entertain rather than to mislead. It pairs this with Stable Diffusion for the imagery and the React Speech Kit to conjure a convincing AI narrator to tell the made-up tale.

The process of using the Narratron is also a bit of a throwback in itself. First, the user strikes a hand shadow pose and snaps a picture of it, much as with an old camera. Each snapshot is then analyzed by an algorithm trained to translate the hand pose into an animal keyword. That animal then becomes the foundation of an original, frame-by-frame immersive yarn embellished with voice acting, sound effects, and music, revealed with every turn of the Narratron’s crank dial.
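For the technically curious, the snap-then-crank flow can be pictured with a minimal Python sketch. This is not the designers’ code: every name here (`POSE_TO_ANIMAL`, `classify_pose`, `write_chapters`, `NarratronSketch`) is hypothetical, the lookup table stands in for the trained pose classifier, and the canned chapter text stands in for the language model, image generation, and narration.

```python
from typing import List, Optional

# Hypothetical stand-in for the trained pose classifier:
# a plain lookup from a pose label to an animal keyword.
POSE_TO_ANIMAL = {
    "two_fingers_up": "rabbit",
    "linked_thumbs": "bird",
    "open_jaw": "wolf",
}


def classify_pose(pose_label: str) -> str:
    """Return an animal keyword for a pose label (assumed fallback: 'creature')."""
    return POSE_TO_ANIMAL.get(pose_label, "creature")


def write_chapters(animal: str, count: int = 3) -> List[str]:
    """Placeholder for the story generator; a real system would call a language model."""
    return [
        f"Chapter {number}: a tale about the {animal}"
        for number in range(1, count + 1)
    ]


class NarratronSketch:
    """Toy model of the interaction: snap a pose, then crank to reveal chapters."""

    def __init__(self) -> None:
        self.chapters: List[str] = []
        self.position = -1  # index of the last chapter revealed

    def snap(self, pose_label: str) -> None:
        """Capture a pose and prepare a fresh story built around its animal."""
        self.chapters = write_chapters(classify_pose(pose_label))
        self.position = -1

    def crank(self) -> Optional[str]:
        """Reveal the next chapter, or None once the story has ended."""
        if self.position + 1 >= len(self.chapters):
            return None
        self.position += 1
        return self.chapters[self.position]
```

Calling `snap("two_fingers_up")` and then `crank()` repeatedly would step through the rabbit story one chapter per turn, which is the whole interaction in miniature: one input gesture to set the subject, one repeated gesture to pace the telling.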

The designers included the vintage movie projector-inspired detail as an aesthetic touch and an homage to classic film cameras. The clean, uncluttered design is intended to connect user and device without distractions and to help plunge viewers into the immersive stories summoned by artificial intelligence.

It’s no Barbie or Oppenheimer, but what the Narratron does in its elementary form may prove prescient of a future where algorithms operate not merely as recommendation engines but become capable of actively synthesizing unique entertainment in real time, reacting across visual, auditory, tactile, and textual modalities, essentially turning you into an active participant in the moviemaking process. Fascinating or horrifying, we’ve yet to determine.